Reasons JavaScript projects become unmaintainable – problems and solutions.

Software doesn't wear out – it tends to collapse under the weight of its own tech debt. Years, or even just months, of ad hoc revisions, delivery prioritised above engineering, team changes and a lack of clear structure and documentation all contribute to codebases which become increasingly difficult to comprehend and maintain.

Teams servicing tech debt tend to suffer low morale, high attrition rates and long onboarding times, as new developers learn the quirks, pitfalls and “where the bodies are buried” in the tangle of code.

In hard-coupled systems one collapsing element can pull the whole app down with it.

Most web applications have similar aims and outcomes: data is fetched from a service and rendered in a readable and aesthetically pleasing format. User input allows the data to be filtered, manipulated and updated, and a new output is rendered.

With these goals in mind, there are many approaches to achieving the same ends. It is wise to choose one which is maintainable and future-proof.

Problem: Increasing complexity

Software evolves, and without vigilance, adding new features and extending existing ones may result in a codebase of ever-increasing complexity.

It is necessary to be able to incorporate new features without muddying the waters of the existing codebase. Without adequate diligence when adding new features, dependencies can become hard coupled and code can become long, convoluted and difficult to reason about.

Complexity hampers understanding, slows development and drains developers’ spirit.

Solutions: Pre-plan, refactor and code-split

When adding a new feature, ascertain whether it may be added in isolation to reduce coupling with existing features. If augmenting or adding to existing features or functions, use it as an opportunity to review and, if necessary, refactor the existing code. Break down long, elaborate and convoluted functions into shorter ones and add tests: long functions carry a high cognitive load and may encapsulate untested code. Consider taking a functional programming approach, breaking larger problems into several smaller ones solved by ‘pure functions’ (functions which always return the same output for the same input and have no external side-effects), which may be tested in isolation. Build time into each sprint to revisit and refactor code.
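As a minimal sketch of this kind of refactor, the hypothetical `summariseOrders` below is composed from small pure functions, each of which can be tested on its own. The order shape (`status`, `price`, `quantity`) is an assumption made purely for illustration.

```javascript
// Hypothetical order shape assumed for this sketch: { status, price, quantity }

// Pure: keeps only completed orders; returns a new array, never mutates input.
function completedOnly(orders) {
  return orders.filter((order) => order.status === "complete");
}

// Pure: the total for a single order line.
function lineTotal(order) {
  return order.price * order.quantity;
}

// Pure: sums the line totals across a list of orders.
function sumTotals(orders) {
  return orders.reduce((sum, order) => sum + lineTotal(order), 0);
}

// Composes the small functions above. Same input, same output,
// and nothing outside the function is read or changed.
function summariseOrders(orders) {
  const completed = completedOnly(orders);
  return { count: completed.length, total: sumTotals(completed) };
}

const orders = [
  { status: "complete", price: 10, quantity: 2 },
  { status: "pending", price: 5, quantity: 1 },
  { status: "complete", price: 3, quantity: 4 },
];
console.log(summariseOrders(orders)); // logs: { count: 2, total: 32 }
```

Because each function depends only on its arguments, a test for `lineTotal` or `completedOnly` needs no mocks, API or DOM.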

Problem: Technological change

Tech moves at an increasingly rapid pace, and codebases that were once gleaming examples of bleeding-edge technology become obsolete. Frameworks, tooling, build processes and dependency management can all be superseded by newer versions. This raises a number of issues. Does anyone have the knowledge or motivation to work on that Angular v1 application with its Grunt tasks and Bower dependencies? Is the old tech stack still supported? New tech usually improves on the old in ease of use, performance and features.

Solutions: Defensive coding, modularisation, refactoring and documentation

Knowing you are writing tomorrow's legacy code, do everything you can to prepare for it. Decouple code and make it as modular as possible to reduce dependencies and allow obsolete parts to be updated independently. Where possible, write ‘vanilla’ JavaScript that does not rely on frameworks or libraries which may become obsolete.

Avoid excessively elaborate build processes which tie the codebase to specific tooling and a specific build pipeline. Tooling can be one of the most complex parts of a modern JavaScript codebase: keep webpack, TypeScript and similar configs as lean and well documented as possible, and avoid doing too much heavy lifting in the build process (e.g. localisation or A/B test versions). This is even more important in monorepos, where there may be more than one build process and, potentially, dependencies between build processes.

Document the overall architecture of the application. There will come a day when all or part of it needs rebuilding; make sure there are blueprints to work from.
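One way to keep a dependency replaceable is to have application code talk to a small interface rather than to the dependency directly. In this minimal sketch, the hypothetical `createPreferences` module depends only on an object with `getItem`/`setItem` methods (the shape of the browser's `localStorage`), so the storage mechanism can be swapped without touching any callers. All names here are illustrative assumptions.

```javascript
// Hypothetical preferences module. It depends only on an object exposing
// getItem(key) and setItem(key, value): the same shape as window.localStorage.
function createPreferences(storage, key = "prefs") {
  // Reads and parses the stored JSON, defaulting to an empty object.
  function read() {
    const raw = storage.getItem(key);
    return raw ? JSON.parse(raw) : {};
  }

  return {
    get(name, fallback) {
      const stored = read();
      return Object.prototype.hasOwnProperty.call(stored, name) ? stored[name] : fallback;
    },
    set(name, value) {
      const stored = read();
      stored[name] = value;
      storage.setItem(key, JSON.stringify(stored));
    },
  };
}

// Hypothetical in-memory adapter with the same interface, useful in tests
// or anywhere localStorage is unavailable (e.g. Node).
function createMemoryStorage() {
  const data = new Map();
  return {
    getItem: (k) => (data.has(k) ? data.get(k) : null),
    setItem: (k, v) => {
      data.set(k, String(v));
    },
  };
}

const prefs = createPreferences(createMemoryStorage());
prefs.set("theme", "dark");
console.log(prefs.get("theme", "light")); // logs: dark
console.log(prefs.get("locale", "en-GB")); // logs: en-GB
```

In a browser the same module could be created with `createPreferences(window.localStorage)`; if that storage technology is ever retired, only a new adapter needs writing.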

Problem: Accumulated tech debt

I have worked on more than one project, for large, well-known companies, where the codebase had evolved over time with little overarching planning, worked on by myriad developers and contractors with only a partial grasp of the code. These are the codebases which eventually topple under the weight of hard coupled additions and freeform structure.

Delivery cadence becomes increasingly slow, dragged down by the need to tiptoe around fragile, interconnected code for fear that breaking one thing will pull down the rest. The endless reverse engineering, and the cognitive load of learning the quirks of complex codebases, put further brakes on delivery.

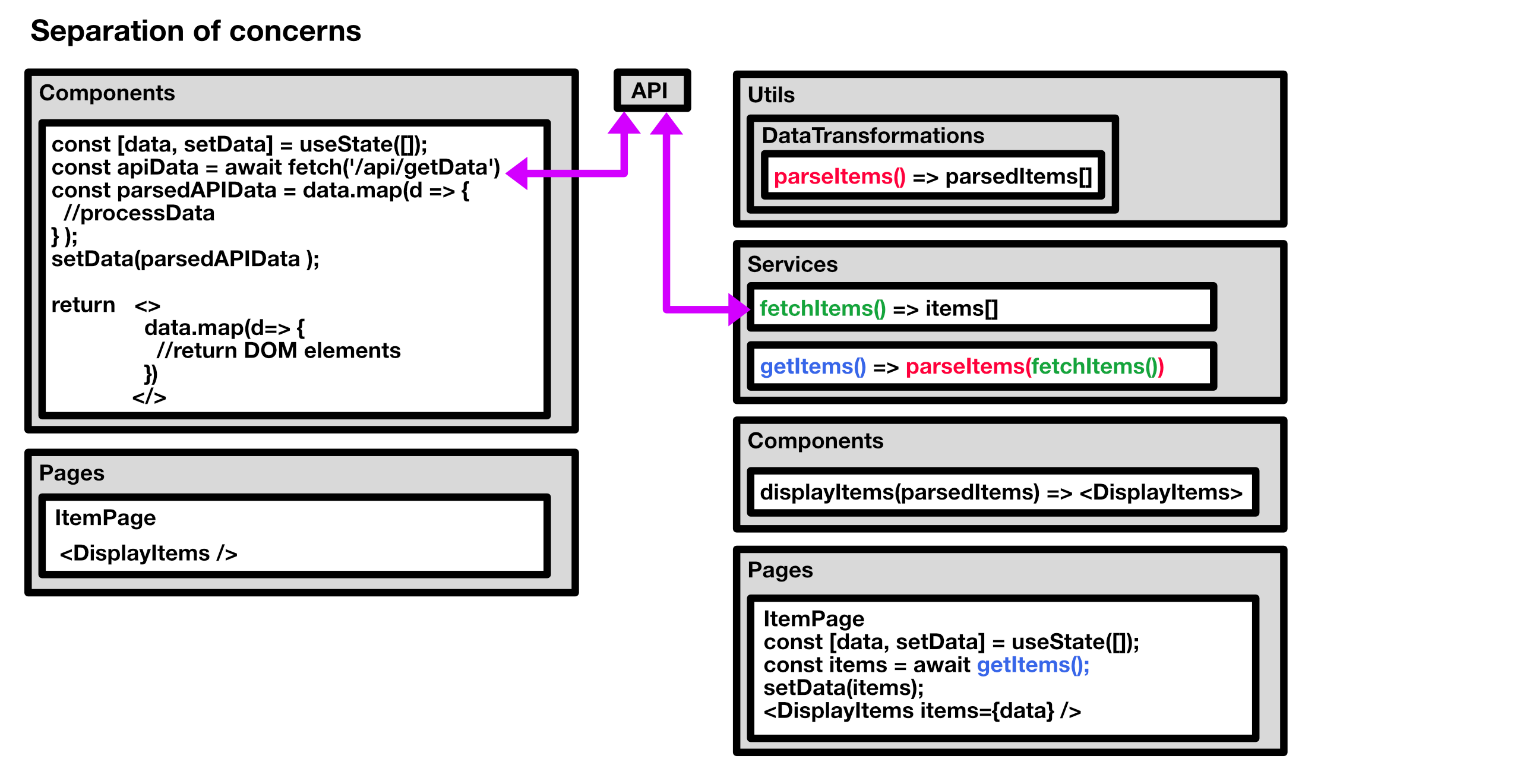

A big part of the issue is code being ‘busked’: rapidly building something which works but wraps many concerns in a single package, e.g. a display component which fetches data from an API, manipulates that data in some way and then displays it, all in one long function. When there is no agreed overarching structure (e.g. a designated place for display components, services and business logic), it’s up to each developer to choose their own adventure when it comes to where their code sits in the grand scheme of things.

Solution: Separate concerns, opinionated structures and code review

Ideally, a codebase should look as if it had a single author. At the individual file level, conventions may be embedded by using linting to enforce code style. At the application level, they may be enforced by adopting an ‘opinionated’ structure: not open to negotiation or interpretation, but a set of rules to be adhered to.
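As an illustration (the rule selection and thresholds are assumptions, not taken from the source), a minimal ESLint flat config in CommonJS form might combine a few consistency rules with core size and complexity rules, which also push back against the long, convoluted functions described earlier.

```javascript
// eslint.config.js (CommonJS flat config). Rules are ESLint core rules;
// the chosen thresholds are illustrative only.
const config = [
  {
    files: ["src/**/*.js"],
    rules: {
      // Consistency: every file follows the same conventions.
      eqeqeq: "error",
      "prefer-const": "error",
      "no-unused-vars": "error",
      // Size and complexity limits that nudge toward short functions.
      complexity: ["warn", 10],
      "max-depth": ["warn", 3],
      "max-params": ["warn", 4],
      "max-lines-per-function": ["warn", { max: 50, skipBlankLines: true, skipComments: true }],
    },
  },
];

module.exports = config;
```

Checked in alongside the code, a config like this makes style a matter of tooling rather than debate in code review.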

When planning an application, identify its constituent parts and separate concerns from the outset. Services, state management, business logic and UI should all be separate concerns, kept in separate directories.
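To make the contrast with the all-in-one ‘busked’ component concrete, here is a minimal sketch of the same job split into a service, pure business logic and a view. All names, the `/users` endpoint and the user data shape are hypothetical; the fetch function is passed in so the service can be tested without a network.

```javascript
// Service: knows how to talk to the API and nothing else.
// The endpoint and the injected fetchFn are assumptions for this sketch.
async function fetchUsers(fetchFn, baseUrl) {
  const response = await fetchFn(`${baseUrl}/users`);
  if (!response.ok) throw new Error(`Request failed: ${response.status}`);
  return response.json();
}

// Business logic: pure, filters and shapes the data.
function activeUserNames(users) {
  return users
    .filter((user) => user.active)
    .map((user) => `${user.firstName} ${user.lastName}`)
    .sort();
}

// View: pure, turns prepared data into markup.
// (Values are not HTML-escaped in this sketch.)
function renderUserList(names) {
  return `<ul>${names.map((name) => `<li>${name}</li>`).join("")}</ul>`;
}

// Wiring the three together at the edge of the application.
async function showActiveUsers(fetchFn, baseUrl) {
  const users = await fetchUsers(fetchFn, baseUrl);
  return renderUserList(activeUserNames(users));
}

// Usage with a fake fetch standing in for the real one.
const fakeFetch = async () => ({
  ok: true,
  status: 200,
  json: async () => [
    { firstName: "Ada", lastName: "Lovelace", active: true },
    { firstName: "Alan", lastName: "Turing", active: false },
  ],
});
showActiveUsers(fakeFetch, "https://api.example.com").then(console.log);
// logs: <ul><li>Ada Lovelace</li></ul>
```

Each part can now be tested, refactored or replaced on its own: swapping the rendering layer leaves the service and the business logic untouched.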

Opinions vary on the best file structure, but something like:

- src
  - Components – individual building-block components, and larger components composed of the smaller building blocks
  - Pages – full pages, composed of components
  - Context – providers for global context
  - Services – calls to the API
  - Hooks – various state, context and effect hooks

An opinionated structure speeds the onboarding of new developers. When there is a place for everything and everything is in its place (services, components, business logic, utilities, data transformers, tests), it becomes simpler to track down where existing code lives and where new code belongs. Linting can do little to enforce structure, so enforcing an application's structure is largely down to code review and clear guidelines for developers.

The rise of the framework and hard coupling

Building websites and apps from scratch is hard, and as technology marched on from the early days of simple HTML it got harder. This prompted the rise of frameworks: package deals where you got your Content Management System (CMS) and User Interface (UI) together. WordPress, Joomla and Drupal led the pack, offering off-the-shelf solutions, and for a while WordPress in particular seemed set to take over the web.

The issue with these frameworks is that they hard coupled all their concerns: CMS, database, back end and front end, display and logic. On top of that, they were often accused of being ‘cookie-cutter’ in their approach, making assumptions about the structure you were likely to impose on your site. There is a perceived advantage to CMS frameworks in that the UI may be refreshed whilst keeping the same back end and data. In truth, technology moves fast and upgrading these frameworks is a chore. The alternative is to stick with the legacy back end, but finding developers willing and able to support legacy systems becomes increasingly difficult. In addition, the output from these frameworks is typically HTML, tying the site or application to devices and browsers capable of displaying web technology.

In the JavaScript world, too, frameworks took off: Ember, Backbone and Angular all offered data fetching, binding and state management. JavaScript frameworks are, by their nature, more decoupled, as they are concerned purely with rendering the UI and fetch their data from back-end web services. Some still had a degree of hard coupling, tending toward a Model-View-Controller (MVC) pattern that unified state management and data fetching in a single library.

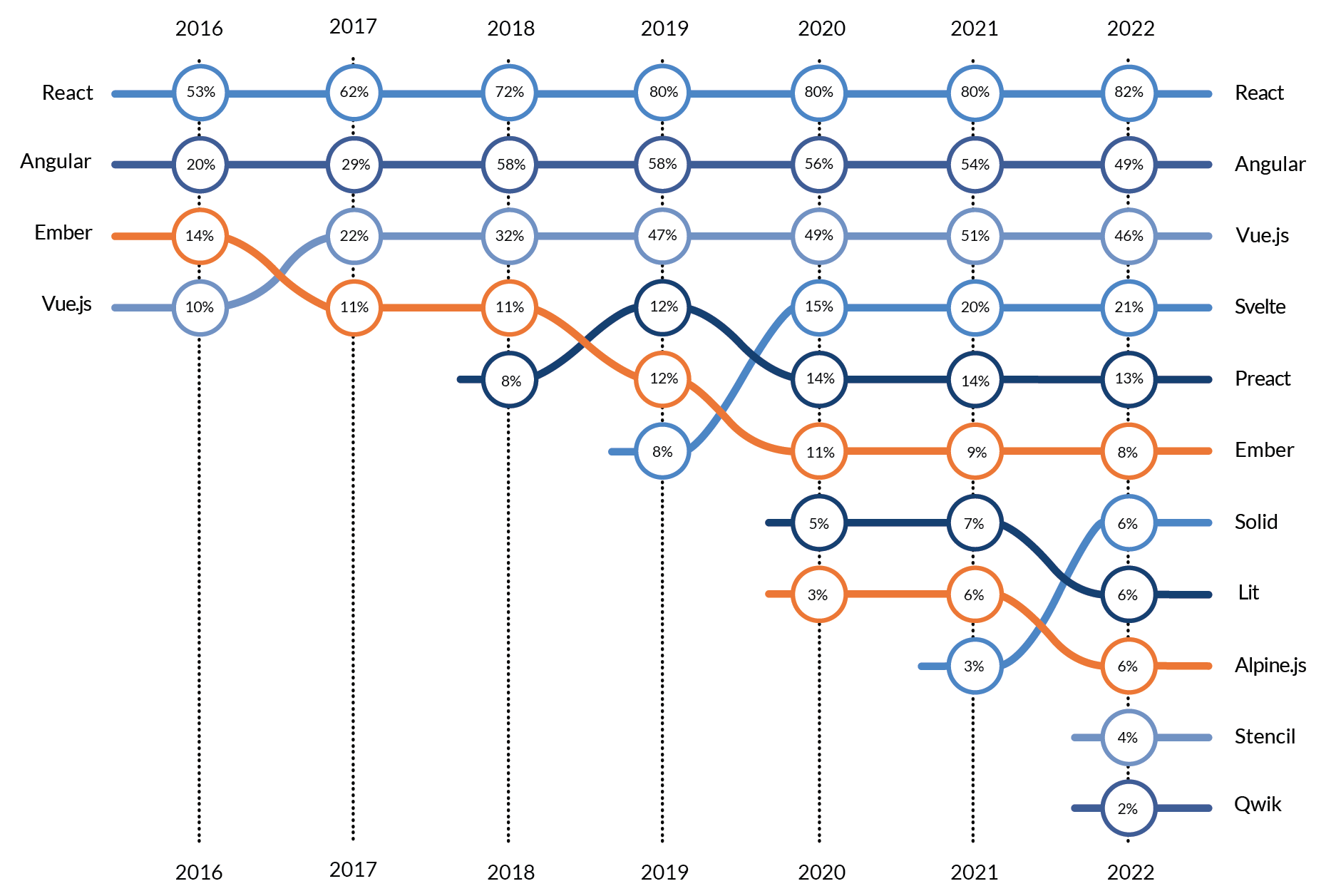

React is, at present, the most popular JavaScript library for UI development. It isn’t, however, a full framework, it is simply a library for creating UI components. It is decoupled from the business logic of state-management and data fetching. Its advantage is it does less, simply focussing on the UI aspect. The JS framework league table React’s popularity may be put down to a number of factors: speed of rendering; ease of use; modularity. One of the main reasons is its laser focus on just being a Component library – you choose your own preferred method of state-management, routing, styling, testing, data-fetching. This allows state-management, services etc to be abstracted into their own, discreet, reusable, framework-agnostic domains. It also allows React components to be built in a decoupled, modular, reusable way. If a service or component needs refactoring or replacing they are not tied to the application as a whole. It's a kit of parts.
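To illustrate what a framework-agnostic state domain can look like, here is a minimal sketch of a store written in plain JavaScript with no React dependency. `createStore`, its `getState`/`setState`/`subscribe` methods and the `cart` example are hypothetical names chosen for this sketch, not any particular library's API.

```javascript
// A minimal, framework-agnostic store: plain JavaScript, no UI library.
function createStore(initialState) {
  let state = initialState;
  const listeners = new Set();

  return {
    // Returns the current state; the same object until the next update.
    getState: () => state,
    // Merges an update (object or updater function) into a new state
    // object, then notifies every subscriber.
    setState(update) {
      const next = typeof update === "function" ? update(state) : update;
      state = { ...state, ...next };
      listeners.forEach((listener) => listener(state));
    },
    // Registers a listener and returns a function that removes it.
    subscribe(listener) {
      listeners.add(listener);
      return () => listeners.delete(listener);
    },
  };
}

// Business logic lives beside the store, not inside a component.
const cart = createStore({ items: [] });
const addItem = (item) => cart.setState((s) => ({ items: [...s.items, item] }));

const unsubscribe = cart.subscribe((s) => console.log(`Items: ${s.items.length}`));
addItem({ id: 1 }); // logs: Items: 1
unsubscribe();
addItem({ id: 2 }); // no log: the listener was removed
console.log(cart.getState().items.length); // logs: 2
```

Because the store knows nothing about React, a component could read it through React 18's `useSyncExternalStore(cart.subscribe, cart.getState)`, and the same store would survive if the UI layer were replaced.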

React also has a huge ecosystem of libraries to draw on, covering charting and graphing, data fetching and 3D; a large base of developers who know how to use it; and a massive number of applications built with it which require new features or maintenance. It could be argued that newer libraries, such as Solid.js, address some of React’s shortcomings and perform better, but they have captured only a fraction of the market and lack the plethora of libraries, plugins and developers React has garnered.

That said, UI code is short-lived, and if an application has a robust API, re-skinning it should not be an onerous task, so don’t be discouraged from at least test-driving the less popular frameworks.

Source: Krusche & Company GmbH, https://kruschecompany.com/popular-javascript-frameworks-and-libraries